|

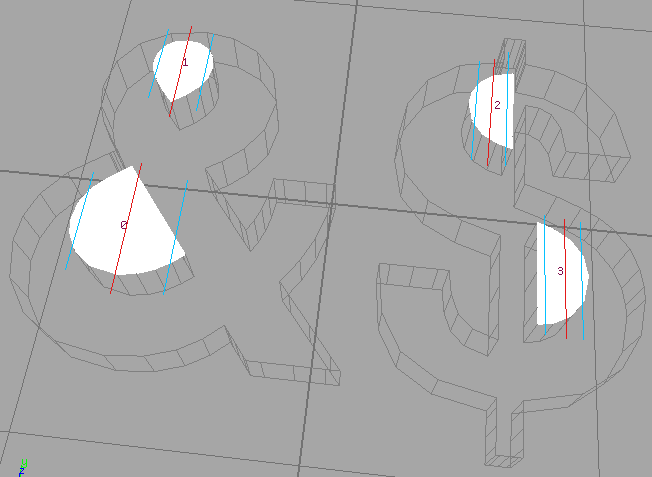

| Slice, bevel each, trim each, join. Simple enough, but where to cut? |

The diagram displays three carefully-selected slice locations: far enough from each other to avoid artifacts, but sufficiently close to overlap with both holes. Can we get away with simpler, automated cuts?

Of course we can. This is Houdini, we can automate anything!

Strategy

I would like to slice one hole at a time, from left to right. Enumerating the holes is surprisingly easy: once you turn off the Extrude SOP's "Hole Polygons" option, columns representing the holes appear! |

| Those "hole columns" sure are convenient now, but I wonder what their true purpose is. |

The next step is to compute knife positions for each hole. We need three slices per hole: one in the middle, and two on the sides.

|

| Each slice crosses its hole, in order to break the surrounding holed polygon into two regular polygons. |

Some of the slices from one hole will cut through other holes, but that's okay. The three manually-placed slices in the ampersand example demonstrate that cutting many holes with one slice is perfectly fine, and having more than three slices per hole will simply divide the same work into a larger number of smaller pieces.

Once we have picked our slice locations, we need to bevel each segment individually, chop off the sides, then merge the results.

|

| Slice everywhere, bevel everywhere, trim everywhere, join. A flawless dollar-making plan! |

Where to draw the lines

Given the location of all the holes, how do we tell Houdini where to perform the slices? Our goal is to generate one point at each of the locations at which we want to slice, like this: |

| Approaching, but not touching, the boundaries of each hole. |

A number of SOP nodes can be used to obtain this arrangement of points, but the simplest is to use Add.

|

| Protip: don't forget to set your expression language to HScript (the black H icon in the upper right) |

The above expressions place the three points at the left, middle, and right ends of the current geometry's bounding box. Of course, if we want those three points to surround a hole, the geometry must be changed to contain nothing but the hole, so the Add SOP needs to be placed inside a ForEach. Also, Add will add the points to a geometry which already contains the hole, so in order to isolate the three new points, the points which define the geometry of the hole must be removed.

Since we are inside a ForEach SOP, the offending points are all vertices of the only polygon in the mesh. It sounds like Delete primitive 0 should do the job, but in Primitive mode, the Delete SOP deletes any point which doesn't belong to any primitive, regardless of any other options you set. Blast does the same. We must instead work in Point mode, deleting the range 0 to $N-3, in order to keep only our three new points.

Finally, we must scale down each triplet by a small amount, so that the points don't touch the sides of the holes. You might be tempted to use a Transform SOP, but I suggest the Primitive SOP instead: it supports all the typical Transform options, but it also pre-fills the pivot to the centroid of each primitive. This way, scaling down the points will bring them closer to the center of the hole, instead of closer to the origin of the scene. Since we have just used Delete to get rid of our only primitive, we need to place the Primitive SOP before the ForEach node.
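To make the arithmetic concrete, here is a minimal sketch of the same computation in plain Python, outside of Houdini. It is not the node network itself: the function names and the `scale` parameter are made up for illustration, each hole is assumed to be described only by the X extent of its bounding box, and the bounding box center stands in for the centroid pivot of the Primitive SOP.

```python
from typing import List, Tuple

def slice_points_for_hole(x_min: float, x_max: float, scale: float = 0.9) -> List[float]:
    """X positions of the left, middle and right slices of a single hole.

    Assumption: the pivot is the center of the bounding box, which
    approximates the centroid used by the Primitive SOP.
    """
    center = (x_min + x_max) / 2.0
    # Shrink the half-width so the outer points stay inside the hole.
    half_width = (x_max - x_min) / 2.0 * scale
    return [center - half_width, center, center + half_width]

def slice_points(holes: List[Tuple[float, float]], scale: float = 0.9) -> List[float]:
    """All slice positions for all holes, sorted from left to right."""
    xs: List[float] = []
    for x_min, x_max in holes:
        xs.extend(slice_points_for_hole(x_min, x_max, scale))
    return sorted(xs)
```

With `scale` below 1, the outer two points of each triplet land slightly inside the hole, approaching its boundary without touching it.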

A sliding window of slices

Now that we have our slice positions, we can start slicing! The purpose of those slices is to separate the polygons into simple shapes, for which no spurious hole-supporting segments are necessary. By slicing each letter into sufficiently-small pieces (smaller than the holes), we can guarantee that each segment is a regular polygon, that is, a polygon with neither holes nor spurious segments. We obtain the pieces using another ForEach, in Number mode this time. Extract each piece by slicing the geometry on the left and right, computing the position of each slice using the positions of the ith and (i+1)st points. The Python expression for this is surprisingly long:

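```python
# Index of the current ForEach iteration (Number mode).
i = int(node("..").stampValue("FORVALUE", 0))
# The (i+1)st point of the null1 node's geometry.
p = node("../null1").geometry().points()[i+1]
# X coordinate at which to slice.
return p.position()[0]
```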

|

| Each colored polygon is regular. The spurious segments are gone! Hurray! |

In the above image, there was only one slice per hole. This is sufficient to obtain regular polygons, but applying the Extrude and PolyBevel SOPs to each of those polygons is not going to produce an appropriate bevel for the combined shape.

|

| Each piece is perfectly beveled, but the slices are way too visible. |

Instead, a workaround is to cut larger, overlapping pieces, before trimming the needlessly-bevelled sides. To do this, simply create your pieces using the ith and (i+3)rd points, bevel, then trim along the (i+1)st and (i+2)nd points. Effectively, this is a sliding window of size 4, iterating over all slice positions from left to right:

|

| Each piece is now as wide as three of our previous pieces, but we will trim the sides so only the middle section remains. |
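The sliding window itself is easy to sketch in plain Python. This is only an illustration of the indexing, not the ForEach network: `sliding_windows` is a hypothetical helper, and the slice positions are assumed to be a plain list of X coordinates.

```python
from typing import List, Tuple

def sliding_windows(slice_xs: List[float]) -> List[Tuple[float, float, float, float]]:
    """Return (cut_left, trim_left, trim_right, cut_right) for each piece.

    The piece is cut between points i and i+3, beveled, then trimmed
    down to the section between points i+1 and i+2.
    """
    xs = sorted(slice_xs)
    return [
        (xs[i], xs[i + 1], xs[i + 2], xs[i + 3])
        for i in range(len(xs) - 3)
    ]

# Consecutive windows keep adjacent middle sections, so the kept parts tile
# the span between the second and the second-to-last slice.
for cut_left, trim_left, trim_right, cut_right in sliding_windows([0, 1, 2, 3, 4]):
    print(f"cut [{cut_left}, {cut_right}], keep [{trim_left}, {trim_right}]")
```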

However, as the above animation clearly demonstrates, the pieces produced by this strategy are way too large! They are large enough to span multiple holes, causing them to contain those nasty spurious segments which ruin our bevels. To produce narrower pieces, we need to slice more often; this is why, in the previous section, we produced three slice locations per hole instead of one.

Three slices per hole is the ideal number: any fewer, and we risk producing a piece which contains an entire hole. With only two slices per hole, for example, the piece centered on those two slices extends from one slice to their left, most likely beyond the left edge of the hole, all the way to one slice to their right, most likely beyond the right edge of the hole. With three slices, we don't have this problem. Whether the central slices are the first two or the last two, one of the two sides of the piece will lie along the third slice, thereby guaranteeing that the hole is cut open.
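If you would rather check this argument than take my word for it, the following plain-Python sketch counts the pieces which swallow an entire hole. Everything in it is an assumption made for illustration: the helper name, the made-up hole extents, and the simplification that a hole is just an interval along X.

```python
from typing import List, Tuple

Interval = Tuple[float, float]

def pieces_containing_a_hole(holes: List[Interval], slice_xs: List[float]) -> List[Tuple[Interval, Interval]]:
    """List every (piece, hole) pair where the hole lies strictly inside the piece."""
    xs = sorted(slice_xs)
    bad = []
    for i in range(len(xs) - 3):
        cut_left, cut_right = xs[i], xs[i + 3]  # the piece before trimming
        for x_min, x_max in holes:
            if cut_left < x_min and x_max < cut_right:
                bad.append(((cut_left, cut_right), (x_min, x_max)))
    return bad

# Four made-up holes, given by their extent along X.
holes = [(0.0, 2.0), (3.0, 5.0), (6.0, 8.0), (9.0, 11.0)]
one_per_hole = [(a + b) / 2 for a, b in holes]
three_per_hole = [x for a, b in holes for x in (a + 0.1, (a + b) / 2, b - 0.1)]

print(len(pieces_containing_a_hole(holes, one_per_hole)))    # some pieces swallow a hole
print(len(pieces_containing_a_hole(holes, three_per_hole)))  # none do
```

The reason is a simple count: only two slices fall strictly inside each piece, so a hole carrying three slices of its own can never fit inside a piece without being cut.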

|

| Left: The regular PolyBevel SOP has issues with holes. Right: Our sliding window approach handles holes gracefully. |

Mysterious vertical lines

Let's try to apply our automated bevelling algorithm to a larger chunk of text. |

| Top: The regular PolyBevel SOP has issues with holes. Bottom: Our sliding window approach handles holes gra... wait, what are those vertical lines? |

Strangely enough, the algorithm described so far only exhibits its imperfections when applied to text which spans more than one line. How is this possible? Let's zoom in.

|

| The line cuts through all the letters at the exact same position. Could it be one of our slices? Or should I say... two of our slices? |

Have you guessed yet? The problem occurs when two holes accidentally align. Then, the slice positions we compute for the two holes also coincide. But then, when the sliding window's third and fourth points coincide, trimming the geometry between those two points has no effect! The needlessly-bevelled side fails to be trimmed, and remains instead embedded inside the result geometry.

|

From left to right (cross-section): slice at points 1 and 4, bevel, slice at points 2 and 3.

Second row: same thing, but points 3 and 4 are close, so the bevel remains visible after the trim.

|
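The failing windows can be spotted before any geometry is cut. Here is a minimal plain-Python sketch, assuming the slice positions are available as a list of X coordinates; `untrimmed_windows` and `min_gap` are hypothetical names, and `min_gap` stands for however much room the bevel needs between a cut and its neighbouring trim.

```python
from typing import List, Tuple

Window = Tuple[float, float, float, float]

def untrimmed_windows(slice_xs: List[float], min_gap: float) -> List[Window]:
    """Return the windows whose trim would leave a beveled side behind.

    A window is (cut_left, trim_left, trim_right, cut_right). It is flagged
    when a trim position is closer than min_gap to the cut on the same side.
    """
    xs = sorted(slice_xs)
    flagged = []
    for i in range(len(xs) - 3):
        cut_left, trim_left, trim_right, cut_right = xs[i:i + 4]
        if trim_left - cut_left < min_gap or cut_right - trim_right < min_gap:
            flagged.append((cut_left, trim_left, trim_right, cut_right))
    return flagged

# Two holes on different lines of text share a slice at x = 3.
print(untrimmed_windows([0, 1, 2, 3, 3, 4, 5], min_gap=0.1))
```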

Consolidating points

To avoid the problem highlighted in the previous section, we need to prevent consecutive slices from being too close to each other. Quick: which Houdini SOP removes points which are too close to each other? |

| The Facet SOP can also make primitives look more flat, remove superfluous points along a curve, correct polygons, compute and/or correct normals, ... |

One slight obstacle is that while our slices are too close along the X axis, the points which represent those slices might be far away along Y or Z.

|

| The slices (in black) are way too close, but the distance between the points (in green) is actually quite large. |

One easy fix is to squash the Y and Z coordinates down to zero, using XForm or Point. The disadvantage of this method is that it's hard to visualize the results, as the points end up far from their corresponding holes.

|

| Can you guess which point corresponds to which hole? Hint: see the previous image. |

Recovering the original position of the points after some of them were removed by Facet is surprisingly easy. Just store the position in a custom attribute!

|

| Another subtlety is that Facet deletes points which are not part of any primitive, so we need to Add then Delete a primitive which goes through all points, i.e. "polygon 0 = *". |
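For readers who prefer code to node networks, the whole consolidation step can be approximated in a few lines of plain Python. This is a greedy sketch and not what the Facet SOP does internally: `consolidate_slices` and `min_distance` are hypothetical names, distances are measured along X only (the equivalent of squashing Y and Z), and each surviving point keeps its full original position (the equivalent of the custom attribute).

```python
from typing import List, Tuple

Point = Tuple[float, float, float]

def consolidate_slices(points: List[Point], min_distance: float) -> List[Point]:
    """Drop points whose X is within min_distance of the previously kept point.

    Only X is compared; the surviving points keep their original
    (x, y, z) position, so they stay next to their hole.
    """
    kept: List[Point] = []
    for p in sorted(points, key=lambda q: q[0]):
        if not kept or p[0] - kept[-1][0] >= min_distance:
            kept.append(p)
    return kept

# Two holes on different lines of text produce nearly aligned slices.
points = [(1.0, 5.0, 0.0), (1.02, -3.0, 0.0), (2.0, 0.0, 0.0)]
print(consolidate_slices(points, min_distance=0.1))
```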

The distance given to the Facet SOP is very important: if the distance is too small, some vertical lines will slip through, while if it is too large, we might miss some of the holes. I used twice the bevel amount, with excellent results:

|

| No imperfections anywhere! Don't believe me? Click to inspect the high-res version. |